I woke up this morning to a text from my ISP: “There is an outage in your area, we are working to resolve the issue.”

I laughed. This is what I live for! Almost all of my services are self-hosted, so I’m barely going to notice the difference!

Wrong.

When the internet went out, the power also went out for a few seconds. Four small computers host all of my services. Of those, one shut down and three rebooted. Of the three that rebooted uncleanly, some services came back online and some didn’t.

Thirty minutes later, the ISP sent a text saying service was back online.

Two hours later, I’m still finding services on my network that are down.

Moral of the story: A UPS has moved to the top of the shopping list! Any suggestions??

When you are bored, back up a VM, then hard-kill it and see if it manages to restart properly.

Software should be able to recover from that.

If it doesn’t, troubleshoot.

That reminds me of Netflix’s Chaos Monkey (basically, during office hours this tool randomly kills stuff).
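Here is a minimal sketch of that hard-kill test. It assumes a libvirt/KVM host managed with `virsh`; ESXi and Proxmox use different tooling. The script takes a snapshot as a stand-in for the backup, powers the VM off without a guest shutdown (`virsh destroy`), and starts it again. It then waits for one TCP port to answer.

The domain name, address, and port are hypothetical placeholders. A snapshot of a running VM generally needs qcow2 disks, and it is not a substitute for a real backup.

```python
import socket
import subprocess
import time


def chaos_commands(domain: str, snapshot: str) -> list[list[str]]:
    """libvirt commands: snapshot, hard power-off (like pulling the plug), start again."""
    return [
        ["virsh", "snapshot-create-as", domain, snapshot],
        ["virsh", "destroy", domain],  # immediate power-off, no guest shutdown
        ["virsh", "start", domain],
    ]


def wait_for_port(host: str, port: int, timeout: float = 300.0) -> bool:
    """Poll until a TCP port accepts connections or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=5):
                return True
        except OSError:
            time.sleep(2)  # not up yet; try again shortly
    return False


if __name__ == "__main__":
    DOMAIN, HOST, PORT = "test-vm", "192.0.2.10", 22  # hypothetical VM and service
    for cmd in chaos_commands(DOMAIN, "pre-chaos"):
        subprocess.run(cmd, check=True)
    print("service back" if wait_for_port(HOST, PORT) else "service did NOT come back")
```

Checking one port per service is crude, but it turns "see if it manages to restart properly" into a pass/fail result you can repeat.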

When I built my home server, this is what I did with all the VMs. I learned how to change the startup delay in ESXi and made sure everything came back online with no issues from a cold boot.

RIP VMware.

While I appreciate the sentiment, most traditional VMs do not like to have their power killed (especially non-journaling file systems).

Even crash-consistent applications can be impacted if the underlying host filesystem is affected by power loss.

I do think that backups are a valid suggestion here, provided that the backup isn’t interrupted by a power surge or loss.

Edit: even journaling file systems aren’t a magic bullet. I’ve had an ext4 filesystem get corrupted when I/O was interrupted by power loss. I get the downvotes for mentioning non-journaling file systems, but seriously, folks, use the Swiss cheese method of protecting your stuff: backups, redundant power/UPS, and documented/automated installation and configuration.

> most traditional VMs do not like to have their power killed (especially non-journaling file systems).

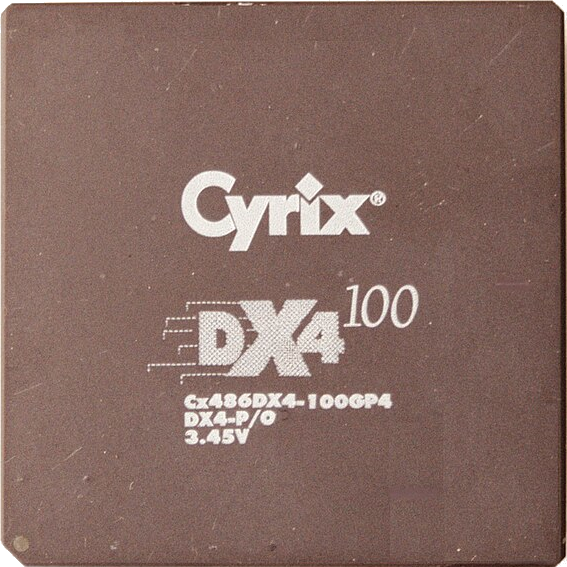

Why are you using a non-journaling file system in 2024, when journaling ones were already common 10+ years ago?

It’s been a while since a power cut affected my services. Is this why?

I remember having to troubleshoot MySQL corruption after an abrupt power loss. Is this no longer a thing?

Databases shouldn’t even need a journaling filesystem; they usually pay attention to when to use fsync and fdatasync.
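To illustrate the fsync/fdatasync point, here is a minimal sketch of the common write-temp-file, flush, rename pattern. It targets POSIX and uses only the Python standard library. The goal is that a power cut leaves either the old or the new version of a file, never a half-written one.

The function name is hypothetical. Real databases layer much more on top of this, such as write-ahead logs and checksums.

```python
import os
import tempfile

# fdatasync is not available on every platform; fall back to fsync there.
_sync_data = getattr(os, "fdatasync", os.fsync)


def durable_write(path: str, data: bytes) -> None:
    """Replace `path` with `data` so a crash leaves either the old or the new file."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file lives in the same directory, hence on the same filesystem,
    # which is what makes the rename below atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()               # push Python's buffer to the kernel
            _sync_data(f.fileno())  # ask the kernel to put the bytes on disk
        os.replace(tmp_path, path)  # atomically swap the new file in
    except BaseException:
        if os.path.exists(tmp_path):  # don't leave stray temp files behind
            os.unlink(tmp_path)
        raise
    # fsync the directory so the rename itself survives a power loss.
    dir_fd = os.open(directory, os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)
```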

In fact, journaling filesystems basically use the same mechanisms as databases, only for filesystem metadata.
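A toy write-ahead journal shows the shared idea: record the change durably first, apply it second, and on restart replay complete records while discarding a torn one. This sketch assumes a single writer and a JSON-lines log file; both are assumptions made for illustration. Journaling filesystems apply the same principle to metadata blocks rather than to key/value pairs.

```python
import json
import os


class JournaledKV:
    """Toy key/value store: every change is logged durably before it is applied."""

    def __init__(self, log_path: str) -> None:
        self.log_path = log_path
        self.data: dict[str, str] = {}
        self._replay()
        self._log = open(log_path, "a", encoding="utf-8")

    def _replay(self) -> None:
        """Rebuild state from the journal and cut off a torn final record."""
        if not os.path.exists(self.log_path):
            return
        valid_end = 0
        with open(self.log_path, "rb") as f:
            for line in f:
                if not line.endswith(b"\n"):
                    break  # crash mid-append: this record never completed
                record = json.loads(line)
                self.data[record["key"]] = record["value"]
                valid_end += len(line)
        # Truncate the partial record so new appends start on a clean line.
        os.truncate(self.log_path, valid_end)

    def set(self, key: str, value: str) -> None:
        # 1. Write the intent to the journal and force it to disk...
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        # 2. ...and only then apply it to the live state.
        self.data[key] = value

    def close(self) -> None:
        self._log.close()
```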

Or, even better, use something like ZFS, whose copy-on-write (CoW) design means it can’t be corrupted by power loss

and don’t fuck with sync writes

I would still consider that generation of filesystem to take effort to use, whereas regular journaling filesystems are so ubiquitous that you need to invest effort to avoid using one.

It was supported, and the default out of the box, when I installed my OS.

Maybe that is the case on some distros if you install a recent version. But on any distro that is still supported today and meant for full-sized PCs (as opposed to embedded devices), you literally have to partition manually to get a non-journaling filesystem.

Are you talking about Linux distros? What manual partitioning has to occur?

Your system should be fine after a hard kill. If it’s not, stop using it, as that’s going to be a problem down the road.

Even besides adding an uninterruptible power supply (UPS) (writing the acronym out for anyone else who would’ve had to Google it), I wonder if this might be a useful exercise in recovering from outages in general. I haven’t actually done any self-hosting of my own. But your mention of still finding down services reminds me of when I learned the benefit of testing system backups as part of making them.

I was lucky in that I didn’t have any data loss, but restoring from my backup took a lot more manual work than I’d anticipated, and it came at an awkward time. Since then, my restore-from-backup process has become way more streamlined.

IMHO you’re optimizing for the wrong thing. 100% availability is not something that’s attainable for a self-hoster without driving yourself crazy.

Like the other comment suggested, I’d rather invest time into having machines and services come back up smoothly after reboots.

That being said, a UPS may be relevant to your setup in other ways. For example, it can allow a parity RAID array to shut down cleanly and reduce the risk of write holes. But that’s just one example, and a UPS is just one solution for that (others being ZFS, non-parity RAID, or SAS/SATA controller cards with a built-in battery and/or hardware RAID support, etc.).

I agree that 99.999% uptime is a pipe dream for most home labs, but I personally think a UPS is worth it, if only to give yourself the option to gracefully shut down systems in the event of a power outage.
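For a sense of scale, the downtime budget behind those "nines" is simple arithmetic. This sketch uses a 365.25-day year. On that basis, 99.999% uptime allows roughly 5.3 minutes of downtime per year.

```python
# Minutes in an average year (365.25 days, to account for leap years).
MINUTES_PER_YEAR = 365.25 * 24 * 60


def downtime_budget_minutes(availability_pct: float) -> float:
    """Allowed downtime per year, in minutes, for a given availability percentage."""
    return (1 - availability_pct / 100) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for pct in (99.0, 99.9, 99.99, 99.999):
        print(f"{pct}% -> {downtime_budget_minutes(pct):,.1f} minutes of downtime per year")
```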

Eventually, I’ll get a working script that checks the battery backup for mains power loss and handles the graceful shutdown for me, but right now that extra 10-15 minutes of battery backup is enough for a manual effort.
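Here is a minimal sketch of such a script. It assumes Network UPS Tools (NUT) is installed and the UPS is configured under the hypothetical name `myups`. The script polls `upsc` for the `ups.status` variable, which carries flags such as `OL` (on line), `OB` (on battery), and `LB` (low battery). It shuts the host down after a grace period on battery, or immediately on low battery.

NUT's own `upsmon` daemon can already do this. Treat the sketch as an illustration of the logic rather than a replacement.

```python
import subprocess
import time

UPS = "myups@localhost"  # hypothetical NUT UPS name; match your ups.conf
POLL_SECONDS = 15
GRACE_SECONDS = 300      # assumption: ride out up to 5 minutes on battery


def read_flags(ups: str = UPS) -> set[str]:
    """Return NUT status flags, e.g. {"OL"} or {"OB", "DISCHRG"}."""
    result = subprocess.run(["upsc", ups, "ups.status"],
                            capture_output=True, text=True, check=True)
    return set(result.stdout.split())


def should_shutdown(flags: set[str], seconds_on_battery: float,
                    grace: float = GRACE_SECONDS) -> bool:
    """Shut down on low battery, or after `grace` seconds on battery."""
    if "LB" in flags:
        return True
    return "OB" in flags and seconds_on_battery >= grace


def main() -> None:
    on_battery_since = None
    while True:
        flags = read_flags()
        if "OB" not in flags:
            on_battery_since = None              # mains power is present
        elif on_battery_since is None:
            on_battery_since = time.monotonic()  # power just went out
        elapsed = 0.0 if on_battery_since is None else time.monotonic() - on_battery_since
        if should_shutdown(flags, elapsed):
            subprocess.run(["shutdown", "-h", "now"], check=False)  # needs root
            return
        time.sleep(POLL_SECONDS)


if __name__ == "__main__":
    main()
```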

Some of the nicer UPS models have little servers built in for remote management. They also communicate with the equipment they power via USB, serial, or an Emergency Power Off (EPO) port.

You shouldn’t have to write a script that polls battery status, the UPS should tell you. Be told, don’t ask.
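In NUT terms, "be told" means letting `upsmon` run a command when an event happens, instead of polling. You configure this with `NOTIFYCMD` and `NOTIFYFLAG <event> EXEC` in `upsmon.conf`. `upsmon` passes the event type in the `NOTIFYTYPE` environment variable and the UPS name in `UPSNAME`.

Below is a hypothetical handler. The severity mapping and the hook comment are illustrative assumptions.

```python
import os
import sys
import syslog

# Hypothetical policy: severity for each upsmon event type we care about.
SEVERITY = {
    "ONBATT": "warning",    # mains lost, running on battery
    "ONLINE": "notice",     # mains restored
    "LOWBATT": "critical",  # battery nearly exhausted
    "FSD": "critical",      # forced shutdown in progress
    "REPLBATT": "warning",  # UPS says the battery needs replacing
}


def classify(notify_type: str) -> str:
    """Map an upsmon NOTIFYTYPE value to a severity; anything else is informational."""
    return SEVERITY.get(notify_type, "info")


def main() -> None:
    notify_type = os.environ.get("NOTIFYTYPE", "UNKNOWN")
    ups = os.environ.get("UPSNAME", "unknown-ups")
    message = " ".join(sys.argv[1:])  # upsmon passes the message text as an argument
    syslog.syslog(f"[{classify(notify_type)}] {ups} {notify_type}: {message}")
    # Hook point (assumption): push a phone notification, or stop
    # non-essential containers on "warning" to stretch battery runtime.


if __name__ == "__main__":
    main()
```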

The problem is that most self-hosters would be at work and unavailable to do a graceful shutdown even if they had a UPS, unless they work fully from home with zero meetings. If they are sleeping or at work (>70% of the day for many or most), a UPS is useless without graceful-shutdown scripts.

I just don’t worry about it and go through the 10-minute startup and verification process if anything happens. It’s easier to use an uptime monitor like Uptime Kuma and a log viewer like Dozzle for all of your services, available locally and remotely, and see if anything failed to come back up.

A general tip on buying UPSes: look for second hand ones - people often don’t realise you can just replace the battery in them (or can’t be bothered) so you can get fancier/larger ones very cheap.

Also, a larger capacity one is better, and it’s likely you’ll find a secondhand one with more capacity/features for a similar price.

Why? If the power has gone out there are very few situations (I can’t actually think of any except brownouts or other transient power loss) where it would be useful to power my server for much longer than it takes to shut down safely.

Longer means you’re more likely to be able to ride out a power cut, and gives you more options if you want/need to complete something more involved than saving and shutting down.

Did the services fail to come back due to the bad reboot, or would they have failed to come back on a clean reboot too? I ugly-reboot my stuff all the time, and unless the hardware fails, I can be pretty sure it’s all going to come back. Getting your stuff to survive a reboot is probably a better use of effort.

I didn’t mean to imply that services actually broke, only that they didn’t come back after a reboot. A clean reboot may have caused some of the same issues, because I’m learning as I go: some services are started by systemctl, some by cron, some… manually. This is certainly a wake-up call that I need to standardize and simplify the way services are started.
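One way to standardize is to give every service a systemd unit, so it starts at boot and restarts if it crashes. Here is a minimal sketch that renders such a unit. The description, command, and user are hypothetical placeholders.

```python
from textwrap import dedent


def render_unit(description: str, exec_start: str, user: str = "root") -> str:
    """Render a minimal systemd service unit that starts at boot and restarts on failure."""
    return dedent(f"""\
        [Unit]
        Description={description}
        Wants=network-online.target
        After=network-online.target

        [Service]
        ExecStart={exec_start}
        User={user}
        Restart=on-failure
        RestartSec=5

        [Install]
        WantedBy=multi-user.target
        """)


if __name__ == "__main__":
    print(render_unit("My app (hypothetical)", "/opt/myapp/run.sh", user="myapp"))
```

To use it, save the output as `/etc/systemd/system/<name>.service`, then run `systemctl daemon-reload` and `systemctl enable --now <name>.service`. After that, the cron and manual start paths can be retired one service at a time.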

We’ve all committed that sin before. It’s better to rely on things surviving a reboot than to try to prevent the reboot.

Also worth looking into some form of uptime monitoring software. When something goes down, you want to know about it asap.

And documenting your setup never hurts :D

On the uptime monitoring, I’ve been quite happy with Uptime Kuma, but if you put it on the same host that’s down… well, that’s not going to work :p (I nearly made that mistake)

It’s not the most detailed thing, but I just use a free account on cron-job.org to send a HEAD request every two minutes to a few services that are reachable from the internet (either just their homepage or some ping endpoint in the API). I then use the status page feature to have a simple second status page on a third-party server.

You can do a bit more on their paid tier, but so far I haven’t needed that.

On the other hand, you could check whether a free-tier or cheap small VPS on one of the many cloud providers is sufficient for an Uptime Kuma installation. Just don’t use the same cloud provider that all your other services run on.

Yeah an unclean reboot shouldn’t break anything as long as it wasn’t doing anything when it went down. I’ve never had any issues when I have to crash a computer unless it was stuck doing an update.

I’m a big fan of running home stuff on old laptops for this reason. Most UPSs give you a few minutes to shut down, laptops (depending on what you run) could give you plenty of extra run time and plenty of margin for a shutdown contingency.

Small, good value, quiet, power efficient, built in battery backup and server terminal. Laptops are dope for home labs!

When you say some services on your network, are you talking about machines or software?

For machines, yes, a UPS makes sense. For software, writing some scripts to run on startup should be enough. Another alternative is setting up Wake-on-LAN; that way you can bring everything back up wherever you are.
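Wake-on-LAN works by broadcasting a "magic packet", usually over UDP. The packet is six `0xFF` bytes followed by the target's MAC address repeated 16 times. Here is a minimal sketch; the MAC address is a placeholder, and the target's firmware and NIC must have WoL enabled.

Because the packet is a local broadcast, waking machines "wherever you are" means sending it from a host on the same LAN, for example over a VPN.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 x 0xFF followed by the MAC repeated 16 times."""
    mac_bytes = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(mac_bytes) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return b"\xff" * 6 + mac_bytes * 16


def wake(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet on the local network (UDP port 9 is a common choice)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


if __name__ == "__main__":
    wake("00:11:22:33:44:55")  # placeholder MAC address
```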

Two pitfalls I had that you can avoid:

- Look at efficiency. It’s not always negligible: it was about 40% of my energy usage because I oversized the UPS. The efficiency is calculated from the top power the UPS can supply, so a 96% efficient 3 kW UPS eats 4% of 3 kW, i.e. 120 watts, even if the load you connected is much smaller than 3 kW.

- Look at the noise level. Mine was almost as loud as a rack server because of all the fans.

I replaced that noisy, power hungry beast with a small quiet 900W APC and I couldn’t be happier
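Taking the efficiency rule of thumb above at face value, the cost of an oversized UPS is easy to estimate. Actual losses vary by model and load, so check the datasheet. With the numbers from that comment, 120 W of continuous overhead comes to about 1,051 kWh over a year.

```python
def ups_overhead_watts(rated_watts: float, efficiency: float) -> float:
    """Commenter's rule of thumb: loss = (1 - efficiency) x rated power, whatever the load."""
    return (1 - efficiency) * rated_watts


def yearly_kwh(watts: float) -> float:
    """Energy drawn by a constant load running all year, in kWh."""
    return watts * 24 * 365 / 1000


if __name__ == "__main__":
    loss = ups_overhead_watts(rated_watts=3000, efficiency=0.96)
    print(f"{loss:.0f} W of overhead -> {yearly_kwh(loss):.0f} kWh per year")
```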

I use a laptop and an external JBOD covered by a low-power UPS. As others said, the point is to bridge power gaps, not to keep working long-term without power. I live in the countryside, so small power gaps happen, especially when my photovoltaic system isn’t producing (no, I have no battery storage; too expensive).

Exact same thing happened to me the other day. Like exactly. Maybe we live in the same area.

A UPS with USB lets you configure a script to properly shut down your server when a power outage happens and the UPS battery is about to run out.

This is why I gave up self hosting. It’s great when it works but it just becomes an expensive second job. I still have Plex/Jellyfin etc but for emails and password vaults I just pay for external services.

if you don’t want it to feel like a second job, you could always quit your first!

I could have the best self hosted setup… living in a van, down by the river!

I like to host as many services as possible and I’m fine with it being a second job at times since this is my main hobby, but I actually agree with you on your examples. The three things I won’t self-host are:

- Emails - I am not willing to put in the effort on this. Plus, my ISP blocks those ports, so I’d already be into using a VPS even if I wanted to host this. I’d rather just pay someone else, like Proton.

- Password manager - I actually did self-host Bitwarden for a long time, but after thinking about it for a while, I decided to take the pay-someone-else approach here too. I’m pretty sure I’m doing everything correctly, but I’m not a security expert. I’d rather be 100% sure my passwords are in safe hands than be 95% sure that I’m doing everything right on this one.

- Lemmy - I’ve heard about (luckily never seen) CSAM attacks on Lemmy/Kbin and will not risk that kind of content being downloaded because I’m federated with an instance dealing with those attacks. I’m happy to throw a couple of bucks at lemmy.world’s Patreon and let them handle that.

> Laptops

My favorite part about using an old laptop as a 24/7/365 plugged-in server is the anticipation of when the lithium battery will explode from overcharging.

“Overcharging” doesn’t exist. There are two circuits preventing the battery from being charged beyond 100%: the usual battery controller, and normally another protection circuit in the battery cell. Sitting at 100% and being warm all the time is enough for a significant hit on the cell’s longevity, though. An easy measure that is possible on many laptops (like ThinkPads) is to set a threshold at which charging stops. The ideal for longevity is around 60%. Also ensure good cooling.

Sorry for being pedantic, but as an electrical engineer it annoys me that there’s more wrong information than correct information about Li-Po/Li-ion batteries, chargers, and even USB wall warts and USB Power Delivery.
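On Linux, many laptops expose charge thresholds through sysfs as `charge_control_start_threshold` and `charge_control_end_threshold`; ThinkPads with the `thinkpad_acpi` driver are one example. Here is a minimal sketch that sets them. It assumes the battery is named `BAT0` (check `/sys/class/power_supply/`) and that the script runs as root.

Some caveats apply:
- Not every model exposes both files.
- The values may not persist across reboots on every model, so you may need to apply them at boot.
- Depending on the current values, a driver may reject a write that would briefly put start above end. In that case, write the two files in the other order.

```python
from pathlib import Path

SYSFS = Path("/sys/class/power_supply")


def set_charge_limits(start: int, end: int, battery: str = "BAT0",
                      root: Path = SYSFS) -> None:
    """Stop charging at `end`% and only resume below `start`% (root + driver support needed)."""
    if not 0 <= start < end <= 100:
        raise ValueError("expected 0 <= start < end <= 100")
    bat = root / battery
    (bat / "charge_control_end_threshold").write_text(f"{end}\n")
    start_file = bat / "charge_control_start_threshold"
    if start_file.exists():  # some drivers only expose the end threshold
        start_file.write_text(f"{start}\n")


if __name__ == "__main__":
    # Hover around the ~60% mentioned above for an always-plugged-in server
    # (the start value of 55 is an assumption).
    set_charge_limits(start=55, end=60)
```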

Isn’t dendrite formation and the shorts they can cause a much bigger concern when dealing with old batteries that are being charged 24/7? Asking a genuine question here, so please don’t shoot me if I’m wrong. 🙂 I’d love to hear more about the most common failure modes and causes for li-po/ion batteries.

Those are symptoms of sitting at that operating point permanently, and they are of course a concern. What I’m getting at is that people think energy gets put into the battery (i.e. it gets charged) as long as a “charger” is connected to the device, hence terms like “overcharged”. But that is not true, because what is commonly referred to as a “charger” is not a charger. It is just a power supply and has literally zero say in if, how, and when the battery gets charged. The battery only gets charged if the charge controller in the device decides to do so, and if the protection circuit allows it. And that is designed to happen only if the battery is not full. When it is full, nothing more happens; no current flows into or out of the battery anymore. There’s no damage from being charged all the time, because no device keeps pumping energy into the cell once it is full.

There is, however, damage from sitting (!) at 100% charge at medium to high temperatures. That happens independently of whether a power supply is connected to the device. You can just as well damage your cells by charging them to 100% and storing them in a warm place while topping them off once in a while. This is why you want to keep them at lower room temperature and at ~60%, no matter whether a device/“charger” is connected or not.

(Of course, keeping a battery at 60% all the time defeats the purpose of the battery. So just try to keep it cool, charged to >20% and <80% most of the time, and you’re fine.)
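To make the "the charger is just a power supply" point concrete, here is a deliberately simplified model of the decision a charge controller makes. Real controllers use constant-current/constant-voltage charging and stop on a current taper. The voltage thresholds and temperature limit here are illustrative assumptions, not values from any particular device.

```python
class ChargeController:
    """Deliberately simplified model of a laptop charge controller's decision."""

    def __init__(self, full_mv: int = 4200, restart_mv: int = 4100,
                 max_temp_c: float = 45.0) -> None:
        self.full_mv = full_mv        # illustrative "100 %" cell voltage
        self.restart_mv = restart_mv  # recharge only after drifting below this
        self.max_temp_c = max_temp_c  # illustrative protection limit
        self.charging = False

    def step(self, voltage_mv: int, temperature_c: float, supply_present: bool) -> bool:
        """Return whether current flows into the cell right now."""
        if not supply_present or temperature_c > self.max_temp_c:
            self.charging = False     # no source, or protection says no
        elif voltage_mv >= self.full_mv:
            self.charging = False     # full: supply stays connected, cell gets nothing
        elif voltage_mv < self.restart_mv:
            self.charging = True      # low enough to (re)start charging
        # In between, keep the previous decision (hysteresis).
        return self.charging
```

Note that once the cell reports full, `step` keeps returning `False` even with the supply present. That is the "no currents flow in+out of the battery anymore" behaviour described above.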

For many li-ion laptop batteries, the manufacturer’s configuration of a 100 % charge is pretty much equivalent to overcharging. I’ve seen many laptops over the years with swollen batteries, almost all of them had been plugged in all the time, with the battery kept at 100 % charge.

As an electrical engineer, you should know that technically there is no 100 % charge for batteries. A battery can be charged, more or less safely, up to a certain voltage. The 100 % charge point is something the manufacturer can choose (within limits that depend on cell chemistry, of course). A manufacturer can choose a higher cell voltage than another to gain a little more capacity, at the cost of long-term reliability. Some manufacturers choose a cell voltage of 4250 mV. While that’s possible and works okay if the battery is charged only occasionally, keeping it plugged in all the time pretty much ensures killing the battery rather quickly. I would certainly call that overcharging.

Since you already mentioned charging thresholds, I just want to say, anyone considering using a laptop as a server should absolutely make use of this feature and limit the maximum charge.

If you say it quickly enough it may sound plausible to some but this is not how battery technology works, as explained by @skilltheamps@feddit.de

- UPS, good idea.
- Backups too.
- This could also be a good opportunity to add a service monitor like Uptime Kuma. That way you know which services are still down once things come back online, with less manual discovery on your part.